When designing, developing, and testing digital products, applications, and web pages, we make a million decisions a day as we try to achieve the outcomes we seek. We base these decisions on our experience, industry best practice, intuition, and creativity. But when it comes to making data-driven decisions that optimize digital products for performance, A/B testing is an important tool to have in our development toolbox.

What is the A/B testing process?

A/B testing is a user experience research methodology that lets testers experiment by comparing two versions of a single variable. Also known as “split-run” testing, this method provides measurable feedback on the performance of the digital products we create. Ambiguity, hunches, and guesswork can be set aside in favor of data-driven decisions, which help make products more effective and create better experiences for our users.

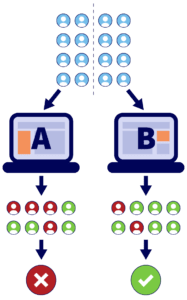

Each experiment aims at a single desired outcome and compares two variations, A and B, that each attempt to achieve it. Users are divided between the two versions of the product, and measurements show which version comes closest to delivering that outcome.
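In practice, each user usually sees only one version, and a returning user should keep seeing the same one. One common way to do this, offered here as an assumption rather than a method the article prescribes, is to hash a stable user ID into one of two buckets. The function name `assign_variant` and the experiment label `"cta-test"` are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "cta-test") -> str:
    """Return "A" or "B" for a user, consistently on every visit."""
    # Hash the experiment name together with the user ID so a returning user
    # always lands in the same group, while separate experiments split users
    # independently of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Treat the first 8 bytes as an unsigned integer; even values go to A and
    # odd values to B, which gives a roughly 50/50 split over many users.
    bucket = int.from_bytes(digest[:8], "big") % 2
    return "A" if bucket == 0 else "B"


# Usage: tally how 10,000 hypothetical user IDs are divided between versions.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1
print(counts)
```

Hashing rather than picking at random on each visit means no assignment table has to be stored, and the same person never flips between A and B mid-test.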

Once a clear winner has emerged from these first two choices, it is common to test again, pitting the winner against a new A or B version to check whether it is still the best choice.

Test just one element at a time. If you direct website visitors to two entirely different page designs, with different graphics, headlines, and calls to action, the results will be unusable: the data may show that one page is more effective than the other, but you will not know which element is responsible for the improvement, or which aspects, if any, visitors disliked.

A/B testing is widely used across digital platforms to optimize conversion rates, dwell time, and engagement with apps and websites. Here are a few areas where you may wish to A/B test, with examples of how A/B testing can be used to improve performance:

A/B testing for email campaigns

A/B testing is commonly used in email campaigns because the visitor journey is usually direct and linear. The measurable outcome is most commonly the count of Call To Action clicks, which is easily defined and measured.

For example, a company has an email list of 10,000 people and wants to drive traffic to an offer page. For this experiment, it randomly selects 2,000 people from the list. It sends Email A to 1,000 of them and Email B to the other 1,000. Both emails are identical in design and content, except for a slightly different Call To Action button.

- Email A says, “Offer Ends Soon.”

- Email B says, “Offer Ends This Friday!”
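The selection step in this example can be sketched in a few lines of Python. This is a minimal illustration, assuming the list is a plain Python list of addresses; `split_for_test` and the example addresses are hypothetical, and your email platform may handle the split differently.

```python
import random


def split_for_test(email_list, test_size=2_000, seed=None):
    """Randomly draw a test sample and split it evenly into groups A and B.

    Returns (group_a, group_b, remainder), where remainder is everyone
    not selected for the test.
    """
    rng = random.Random(seed)
    # Shuffle a copy so the caller's list is left untouched.
    shuffled = list(email_list)
    rng.shuffle(shuffled)
    sample, remainder = shuffled[:test_size], shuffled[test_size:]
    half = test_size // 2
    return sample[:half], sample[half:], remainder


addresses = [f"subscriber{i}@example.com" for i in range(10_000)]
group_a, group_b, remainder = split_for_test(addresses, seed=1)
print(len(group_a), len(group_b), len(remainder))  # 1000 1000 8000
```

Shuffling before splitting matters: taking the first 2,000 names from a list sorted by sign-up date, for instance, would put only your oldest subscribers in the test.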

In this hypothetical experiment, the Call To Action in Email B proved more effective than the one in Email A. Based on this result, the company can make the data-driven decision to send Email B to the remaining 8,000 people on its list.
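How do you decide that Email B really was more effective, rather than lucky? One common approach, an assumption here rather than something the example specifies, is a two-proportion z-test on the click rates, which estimates how likely a gap this large would be if both emails actually performed the same. The click counts below are invented for illustration, and `two_proportion_z_test` is a hypothetical helper name.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a, sent_a, clicks_b, sent_b):
    """Two-sided z-test for a difference between two click-through rates.

    Returns (rate_a, rate_b, z, p_value).
    """
    rate_a = clicks_a / sent_a
    rate_b = clicks_b / sent_b
    # Under the "no difference" hypothesis both emails share one click rate,
    # so estimate it from the pooled data.
    pooled = (clicks_a + clicks_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Hypothetical counts for illustration only; not real campaign data.
rate_a, rate_b, z, p = two_proportion_z_test(clicks_a=40, sent_a=1_000,
                                             clicks_b=62, sent_b=1_000)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p:.3f}")
```

With these made-up numbers the p-value falls below the conventional 0.05 threshold, so B's lead would usually be treated as a real effect. With only a handful of clicks per group, the normal approximation behind this test becomes unreliable.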

A/B testing for ad copy

As with the Call To Action email test above, A/B testing is a useful way to discover how effective your straplines, offers, and marketing copy are.

You probably have a good grasp of how this works by now, so I won’t go into too much detail. When setting up display ads or Google Ads, it is worth creating more than one version of each advert, because doing so helps you find out which version resonates most with your audience. The Google Ads set-up process lets you create multiple versions of an advert, effectively carrying out the A/B test on your behalf, and the resulting data shows which version receives the most clicks.

You can test headline length, tone of voice, and any specific offers you wish to promote to learn which generates the most interest.
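Raw click counts can mislead when ad versions are shown a different number of times. A hedged sketch, assuming you have exported impressions and clicks for each version, ranks them by click-through rate (clicks divided by impressions) instead. The figures and the `AdVariant` and `rank_by_ctr` names are hypothetical and not part of any Google Ads API.

```python
from dataclasses import dataclass


@dataclass
class AdVariant:
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Click-through rate: the share of impressions that led to a click.
        return self.clicks / self.impressions if self.impressions else 0.0


def rank_by_ctr(variants):
    """Return variants sorted from highest to lowest click-through rate."""
    return sorted(variants, key=lambda v: v.ctr, reverse=True)


# Hypothetical figures for three versions of one advert.
ads = [
    AdVariant("Short headline", impressions=12_000, clicks=300),
    AdVariant("Long headline", impressions=8_000, clicks=240),
    AdVariant("Offer in headline", impressions=10_000, clicks=280),
]
for ad in rank_by_ctr(ads):
    print(f"{ad.name:<18} {ad.clicks:>4} clicks  CTR {ad.ctr:.2%}")
```

In this made-up data, the version with the most clicks has the lowest click-through rate, simply because it was shown most often.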

A/B testing for button and user interface design

It’s not only text that can be A/B tested; visuals and graphical user interface elements can be split tested too. Testing different button colors on a page or within an ad shows which one best catches the eye. In the same way, you can find out which variation drives the most traffic to your website, and which placement and positioning of these elements work best.

A/B testing for images

We know that a picture is worth a thousand words, so finding the best photograph or illustration for your campaign can make a real difference when optimizing for conversion. You could test two different images on a page or advert, keeping every other aspect the same, to learn which one resonates most with your audience.

A/B testing your opt-in forms

Capturing people’s attention and driving traffic to your landing pages is essential, but getting your visitors to act is what it’s all about. When optimizing your landing pages, pay close attention to the points that invite visitors to interact. Their job is to convert website visitors into leads, and to do so as successfully as possible. This is where A/B testing your opt-in forms can make an impact.

There are a few areas of form design that you may wish to test, including:

- The form’s placement on the page – Try a version with the form at the top of the page and one with the form lower down.

- The form label alignment – The positioning of the field labels matters because it dictates how your visitors’ eyes move across the page. Spacing and positioning can affect how easily users can sign up.

- The number of form fields – Depending on your industry and audience type, you will need to consider how many input fields make up your opt-in forms. A general UX rule is to reduce users’ workload and remove any superfluous barriers to entry, so keeping fields to a minimum is generally a good thing. It can be useful to test a pared-down version of your form against another that includes additional fields, which would give your marketing team better lead data for their CRMs.

How much data do you need?

A/B testing is most effective when you have a large data pool to draw from. It is not usually worth carrying out a sophisticated A/B testing process with only 16 or so participants. A small group can give an initial indication, but the more users engage with your product during the test, the more accurate and reliable your dataset will be. As a rough guide, upwards of a thousand visitors or users will provide reliable trends and workable data, although the number you actually need depends on your baseline conversion rate and on how small a difference you want to detect.
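To estimate how many visitors a particular test needs, one common approximation for comparing two conversion rates is sketched below. It assumes a two-sided test at a 5% significance level with 80% power, which are conventional defaults rather than figures from this article, and `sample_size_per_variant` is a hypothetical helper name.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH version to detect the given lift.

    Uses the normal approximation for comparing two proportions with a
    two-sided test at significance level alpha and the requested power.
    """
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to have something to detect")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical scenario: a 4% click rate you hope to raise to 6%.
print(sample_size_per_variant(0.04, 0.06))
```

For this scenario the estimate is roughly 1,860 visitors per version, in line with the “upwards of a thousand” guide. Halving the lift you want to detect roughly quadruples the number of visitors you need.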

It’s also worth running A/B tests for a minimum of 14 days so that factors outside the scope of your experiment can be accounted for. These include the day of the week, the time of day, and other events that can affect user behavior and skew the results. The longer you can run your test, the more these effects even out, and the clearer the trends are likely to become.

In summary

Knowledge is power. A/B testing aspects of your digital products, marketing campaigns, and landing pages supplies the data you need to optimize and improve performance.

When you know what to test, you can gather useful insights and gain a clearer understanding of the outcomes you want to achieve. These insights will help you make data-driven decisions that increase your chances of success.